DeepLTK is an award-winning, fully LabVIEW-native toolkit that gives researchers and engineers intuitive, powerful tools to develop, validate, and deploy deep learning systems directly within LabVIEW. Because it is developed entirely inside LabVIEW, it is unique in the market and greatly simplifies the integration of machine learning technologies into LabVIEW applications.

Supporting tasks like image classification, object detection, signal recognition, and anomaly detection, DeepLTK enables efficient training and deployment on embedded CPUs, GPUs, and FPGAs.

Proven for over 10 years, it delivers reliable performance and long-term support across industrial domains including test and measurement, automation, life sciences, and embedded systems.

Key Features

- Build, train, evaluate, and deploy deep neural networks
- Save and reload trained models for deployment
- Accelerate training and inference using GPUs and FPGAs
- Visualize network architecture and monitor key performance metrics
- Use built-in tools for debugging and performance analysis
- Deploy models to various targets, including NI’s Real-Time systems
- Explore over 20 ready-to-use examples for real-world applications

Requirements

Installation

The toolkit is distributed as a VIPM (VI Package Manager) installer, bundling the core library, documentation, and reference examples.

Development

- LabVIEW 2020 or later (32-bit or 64-bit)
- LabVIEW 64-bit for GPU acceleration
- Windows 10 or later (64-bit)

Supported Network Architectures

- MLP - Multilayer Perceptron
- CNN - Convolutional Neural Network
- FCN - Fully Convolutional Network
- ResNet, Wide-ResNet, ConvNeXt - Deep residual networks for image recognition
- YOLO v2/v4/v8 - Real-time object detection architectures
- U-Net - Semantic segmentation
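The residual architectures above (ResNet and its variants) are built around shortcut connections that add a block's input back to its output, so each block learns a correction F(x) rather than a full mapping. As a language-agnostic illustration only, the pure-Python sketch below computes relu(F(x) + x), where F is two fully connected layers. It is not DeepLTK's API (DeepLTK networks are assembled graphically in LabVIEW), and the function names are hypothetical.

```python
# Generic illustration of a residual ("shortcut") connection.
# Hypothetical helper names; NOT part of DeepLTK.

def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in v]

def dense(v, weights, bias):
    """Fully connected layer: out[i] = sum_j weights[i][j] * v[j] + bias[i]."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def residual_block(x, w1, b1, w2, b2):
    """Two dense layers form F(x); the shortcut adds x back: relu(F(x) + x)."""
    h = relu(dense(x, w1, b1))
    f = dense(h, w2, b2)
    # The addition requires F(x) and x to have the same length.
    return relu([fi + xi for fi, xi in zip(f, x)])

if __name__ == "__main__":
    x = [1.0, -2.0]
    zero_w = [[0.0, 0.0], [0.0, 0.0]]
    zero_b = [0.0, 0.0]
    # With all-zero weights F(x) = 0, so the block reduces to relu(x).
    print(residual_block(x, zero_w, zero_b, zero_w, zero_b))  # [1.0, 0.0]
```

The zero-weight case shows why shortcuts help training deep stacks: a block can start out close to an identity mapping and only learn the residual it needs.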

Supported Layers

DeepLTK supports the layers needed to implement deep neural network architectures for common machine learning applications such as image classification, object detection, instance segmentation, voice recognition, anomaly detection, and more:

- Input (1D, 3D)
- Data Augmentation
- Convolutional (Conv1D, Conv2D)
- Fully Connected (Dense, Linear)
- Batch Normalization (1D, 3D)
- Layer Normalization
- Scaler
- Pool (maximum, average)
- Upsampling
- ShortCut
- Concatenation
- Dropout (1D, 3D)
- Softmax
- Object Detection (YOLO v2, v4, v8)
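Several of the layers listed above perform simple, well-defined computations. The pure-Python sketch below illustrates three of them (Softmax, Layer Normalization, and max pooling) on plain lists. It is a generic illustration of the underlying math, not DeepLTK's implementation or API, and the function names are hypothetical. For brevity, the layer-normalization sketch uses scalar scale and shift parameters, whereas trainable layers typically learn one pair per feature.

```python
import math

# Generic illustrations of common layer computations.
# Hypothetical helper names; NOT part of DeepLTK.

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(v, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one sample's features to zero mean and unit variance,
    then apply a (here scalar) scale gamma and shift beta."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in v]

def max_pool_1d(v, size=2, stride=2):
    """Keep the largest value in each window of `size` elements."""
    return [max(v[i:i + size]) for i in range(0, len(v) - size + 1, stride)]

if __name__ == "__main__":
    print(softmax([1.0, 2.0, 3.0]))
    print(layer_norm([1.0, 3.0]))
    print(max_pool_1d([1, 3, 2, 5, 4, 0]))  # [3, 5, 4]
```

Average pooling follows the same windowing as `max_pool_1d`, replacing `max` with the window mean.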

Applications

Ngene’s toolkits enable a wide range of AI-driven solutions across industries, delivering powerful capabilities in image analysis, signal processing, data prediction, and more to enhance automation, inspection, and analytics.

Image Classification

Automatically categorize images into predefined classes with high accuracy.

Object Detection

Locate and identify objects within images or video streams in real time.

Semantic Segmentation

Assign a class label to each pixel for detailed scene understanding.

Time Series Prediction

Forecast future values based on historical sequential data.

Voice Recognition

Convert spoken language into text for voice-driven applications.

Non-Linear Regression

Model complex relationships between variables for accurate predictions.

Anomaly Detection

Detect unusual patterns or defects without prior labeled data.

Tabular Data Analysis

Analyze and extract insights from structured numerical and categorical data.

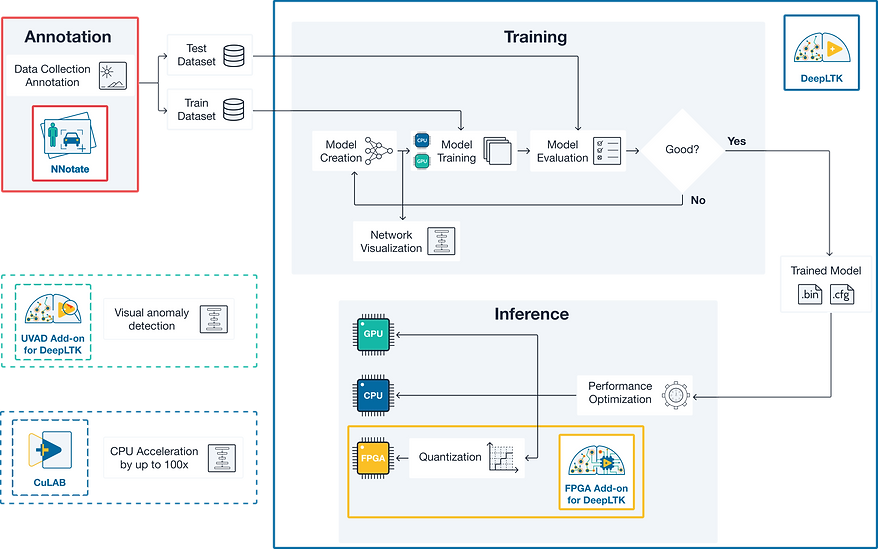

Complete Deep Learning Toolchain for LabVIEW

DeepLTK provides everything needed to build end-to-end deep learning workflows inside LabVIEW: from data preparation and annotation with NNotate, to model training and optimization, to deployment across CPUs, GPUs, and FPGAs.

With no need for external frameworks, DeepLTK enables seamless integration, faster development, and reliable performance for industrial AI applications.

How it works